How Dating Sites and Apps Use Algorithms to Automate Racial Bias

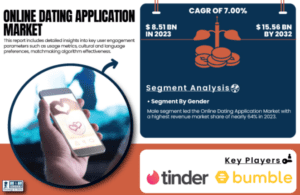

In online dating, platforms like Tinder, Bumble, and OkCupid have changed how people meet romantic partners. Despite their benefits, these apps face significant criticism for embedding and perpetuating racial bias. In this analysis, I look at how dating sites unwittingly automate racism through their algorithms and user interfaces, and at what measures could make digital matchmaking more equitable.

Understanding the Role of Algorithms in Matchmaking

The Basics of Dating Site Algorithms

Most dating apps rely on algorithms that match users based on shared interests, geographical proximity, and stated preferences. While this might seem efficient, these algorithms can also amplify societal biases. For instance, if a significant percentage of users prefer not to see certain racial groups, the algorithm may learn to deprioritize profiles of people from those groups in match suggestions across the platform. This not only reduces the visibility of minority users but also limits the diversity of the dating pool, perpetuating cycles of exclusion.
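To make the mechanism concrete, here is a minimal sketch of a ranker that learns from platform-wide swipe behavior. It is an illustration under stated assumptions, not any real app's code: the function names, the `base_score` field, and the swipe log are all hypothetical, and using the group label as a direct input is a simplification (in practice the same effect can arise indirectly through correlated signals).

```python
from collections import defaultdict

def learn_group_like_rates(swipes):
    """swipes: iterable of (profile_group, liked) pairs pooled from all users."""
    shown = defaultdict(int)
    liked = defaultdict(int)
    for group, was_liked in swipes:
        shown[group] += 1
        liked[group] += int(was_liked)
    return {g: liked[g] / shown[g] for g in shown}

def rank_candidates(candidates, like_rates):
    """Order candidates by base score weighted by their group's learned like rate."""
    return sorted(
        candidates,
        key=lambda c: c["base_score"] * like_rates.get(c["group"], 0.5),
        reverse=True,
    )

# Hypothetical swipe log: profiles in group "B" are liked less often platform-wide.
swipe_log = (
    [("A", True)] * 60 + [("A", False)] * 40
    + [("B", True)] * 30 + [("B", False)] * 70
)
rates = learn_group_like_rates(swipe_log)

# p2 is the stronger match on interests and proximity (higher base_score).
candidates = [
    {"name": "p1", "group": "A", "base_score": 0.70},
    {"name": "p2", "group": "B", "base_score": 0.90},
]
ranked = rank_candidates(candidates, rates)
print([c["name"] for c in ranked])
```

In this toy setup the profile with the higher base score still ends up ranked second, because the aggregate preferences of other users are folded into every individual's suggestions.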

Data Interpretation and Its Consequences

How dating platforms interpret and leverage user data plays a critical role in shaping the dating landscape. For example, data on user behavior—such as swiping patterns and interaction rates—feeds back into the system, which then seeks to optimize user engagement by reinforcing these patterns. This often results in an echo chamber where stereotypes and racial preferences are maintained rather than challenged, leading to a homogenized user experience that marginalizes minority profiles.
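The feedback loop described here can be sketched with a small deterministic simulation. The model is an assumption made for illustration: each round, exposure is reallocated in proportion to the engagement each group generated in the previous round. The like rates and the function name `simulate_exposure` are hypothetical.

```python
def simulate_exposure(like_rates, rounds):
    """Return the per-group share of impressions after each engagement-driven update."""
    groups = list(like_rates)
    share = {g: 1 / len(groups) for g in groups}  # start from equal exposure
    history = [dict(share)]
    for _ in range(rounds):
        # Engagement generated by a group = its exposure share * its like rate.
        engagement = {g: share[g] * like_rates[g] for g in groups}
        total = sum(engagement.values())
        # The next round's exposure is allocated in proportion to that engagement.
        share = {g: engagement[g] / total for g in groups}
        history.append(dict(share))
    return history

# A modest gap in like rates (0.6 vs 0.5) compounds round after round.
history = simulate_exposure({"A": 0.6, "B": 0.5}, rounds=10)
for step in (0, 1, 5, 10):
    print(step, {g: round(s, 3) for g, s in history[step].items()})
```

Even though the two groups start with equal exposure and the gap in like rates is small, group B's share of impressions shrinks every round, which is the echo-chamber effect in miniature.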

Recognizing Bias in User Preferences and Filters

The Impact of User-Selected Filters

The personalization features in many dating apps allow users to filter potential matches based on race, ethnicity, and other demographics. While marketed as user preference, this capability can facilitate racial segregation. The ethical dilemma arises when these preferences lead to systematic exclusion, which is evident in the reduced match rates and interactions for profiles of Black women and Asian men, as reported by several user feedback studies and data analytics reports from within the industry.

How Racial Biases Are Coded into Dating Apps

Dating sites inadvertently program racial biases into their systems by normalizing the idea that racial preferences are mere attraction filters. By not challenging these user preferences and sometimes even asking users to specify their race or ethnicity in detailed profiles, platforms create an environment where racism is just a checkbox away. This is not only a technical oversight but a moral failure to address how deeply ingrained societal biases can influence technological implementations.

The Societal Impact of Racial Discrimination in Online Dating

Emotional Toll on Minority Users

The impact of experiencing racism on dating sites extends beyond missed connections. For minority users who are repeatedly deprioritized, the constant rejection can lead to significant psychological distress. Studies indicate a decrease in self-esteem and an increase in feelings of alienation among users who feel discriminated against based on their racial or ethnic identities, which can have long-lasting effects on their overall mental health and willingness to engage in social platforms.

Reinforcing Stereotypes and Social Divides

Dating apps that allow the perpetuation of racial stereotypes contribute to wider social divides. By enabling users to select or reject potential matches based on race, these platforms reinforce the idea that racial preferences are normal and acceptable, thereby institutionalizing racism and hampering cross-cultural understanding and respect.

Investigating Real-World Examples and Solutions

Case Studies Highlighting Algorithmic Discrimination

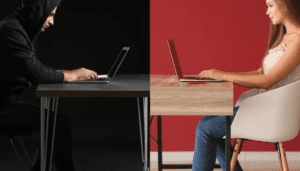

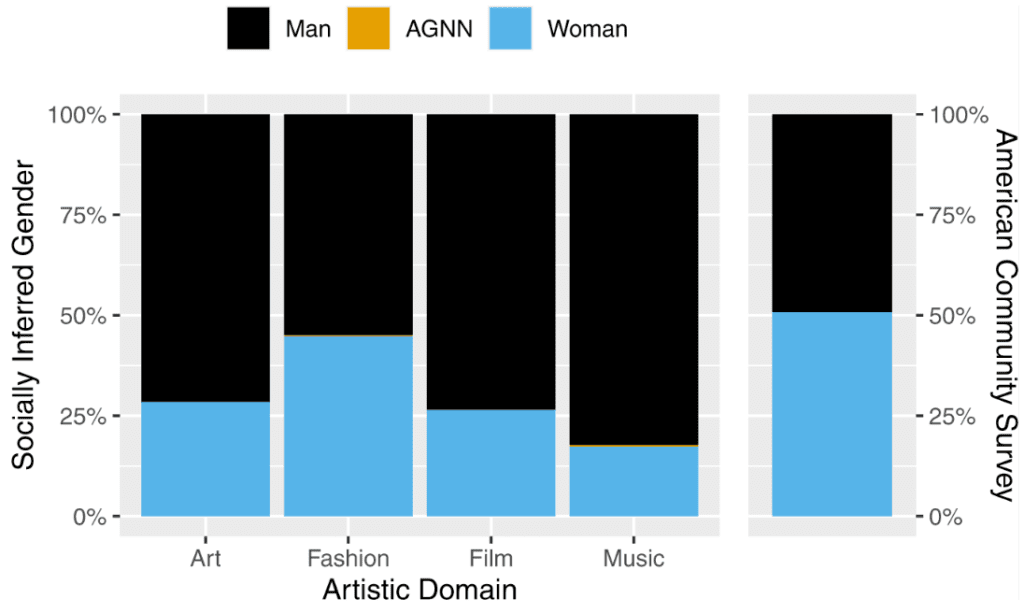

One revealing study conducted on major dating platforms showed how algorithms amplified existing biases. The data suggested that profiles of Asian men and Black women were systematically rated lower in perceived attractiveness and compatibility scores, reducing their visibility and chances of making meaningful connections. These findings have prompted a few platforms to reconsider how profile attractiveness is measured and displayed.

Steps Towards Inclusive and Fair Matchmaking

In response to widespread criticism, some dating sites have begun to remove or redesign racial filters and are reevaluating the ethical implications of their data practices. Initiatives to increase awareness of racial biases and encourage more inclusive interactions are also being implemented, with new guidelines for developers on creating algorithms that promote diversity and fairness.

- Remove Racial Filters: Eliminating the option to filter potential matches by race or ethnicity is a crucial step in reducing systemic racism on dating platforms. This action would prevent users from excluding entire groups based on racial preferences, promoting a more inclusive environment.

- Revise Algorithm Design: Dating apps should reevaluate and redesign their algorithms to avoid incorporating user biases that lead to discriminatory outcomes. For example, modifying algorithms to de-emphasize racial preferences in match suggestions can help reduce the perpetuation of stereotypes and biases.

- Increase Transparency: Platforms can be more open about how their algorithms work and how data is used to match profiles. Transparency can build trust and allow users to understand and question the processes that influence their matchmaking experiences.

- Promote Diversity in Advertising: Dating sites should ensure their marketing materials reflect a diverse range of members. This not only appeals to a broader audience but also sets a normative standard for diversity and inclusion within the platform.

- Bias Awareness Training: Implement training programs for developers and staff that focus on understanding and mitigating unconscious biases in coding and data analysis. This can help ensure that new features and algorithms are designed with fairness in mind.

- User Education Campaigns: Launch campaigns to educate users about the negative impacts of racial discrimination and encourage open-mindedness in dating. Education can help shift user attitudes and reduce the demand for discriminatory features like racial filters.

- Community Feedback Systems: Create mechanisms for feedback from users, especially from minority groups, about their experiences on the platform. This feedback can be crucial in identifying issues and making improvements.

- Regular Audits for Bias: Conduct regular audits of matchmaking outcomes to identify any patterns of bias or discrimination against certain user groups. These audits can guide ongoing adjustments to algorithms and policies.

- Partner with Advocacy Groups: Collaborate with civil rights and advocacy organizations to develop best practices for equitable matchmaking. These partnerships can provide valuable insights into the needs and experiences of diverse communities.

- Inclusive Design Practices: Incorporate inclusive design principles from the ground up when developing new features or overhauling existing systems. This includes considering the potential impacts on all users, regardless of race, gender, or ethnicity.
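As one way to picture the "Regular Audits for Bias" step in the list above, the sketch below computes match rates per group and compares each group to the best-performing one. Everything here is hypothetical: the log format, the function names, and the sample numbers. The 0.8 threshold is borrowed from the four-fifths rule of thumb used in US employment selection guidelines and is only an assumption here, not an established standard for dating platforms.

```python
from collections import defaultdict

def match_rates_by_group(impressions):
    """impressions: iterable of (group, matched) pairs, one per time a profile was shown."""
    shown = defaultdict(int)
    matched = defaultdict(int)
    for group, was_matched in impressions:
        shown[group] += 1
        matched[group] += int(was_matched)
    return {g: matched[g] / shown[g] for g in shown}

def impact_ratios(rates):
    """Each group's rate divided by the highest group's rate (1.0 = parity with the top group)."""
    best = max(rates.values())
    if best == 0:
        return {g: 1.0 for g in rates}
    return {g: r / best for g, r in rates.items()}

def flag_groups(ratios, threshold=0.8):
    """Groups whose ratio falls below the (assumed) threshold, for human review."""
    return sorted(g for g, r in ratios.items() if r < threshold)

# Hypothetical audit log: 200 impressions per group with different match counts.
audit_log = (
    [("A", True)] * 40 + [("A", False)] * 160
    + [("B", True)] * 20 + [("B", False)] * 180
    + [("C", True)] * 36 + [("C", False)] * 164
)
rates = match_rates_by_group(audit_log)
ratios = impact_ratios(rates)
flagged = flag_groups(ratios)
print(rates, ratios, flagged)
```

A flagged group is a prompt for investigation rather than proof of discrimination; an audit like this would need to be read alongside user feedback and the other measures in the list.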

Legal and Ethical Considerations in Modern Matchmaking

The Role of Legislation in Curbing Discrimination

The legal framework surrounding racial discrimination on dating apps is still evolving. In some jurisdictions, legislation is being considered that would classify the enabling of racial filters on dating platforms as a form of discrimination, similar to discrimination in housing or employment, and would require dating sites to adhere to stricter equality standards.

Ethical Responsibilities of Dating Platforms

Dating app developers and platforms have a moral obligation to dismantle racial biases within their systems. It’s not just about compliance with emerging laws but about taking proactive steps to ensure their technologies promote genuine social integration. This involves a commitment to continuous improvement and transparency in how user data is used to shape romantic landscapes.

Conclusion

Dating in the digital age requires confronting the automated mechanisms that perpetuate racial discrimination. By understanding and addressing these issues, we can move towards a more inclusive and equitable digital dating environment. The steps outlined above offer a roadmap for innovation and ethical engagement in the digital dating industry, aimed at ensuring fairness and respect for all users.

FAQ: Understanding Racial Bias in Dating Apps

Q1: How do dating sites contribute to racial bias?

A1: Dating sites and apps like Tinder, Bumble, and OkCupid may unintentionally automate racism through their algorithms and user interface designs. By allowing users to filter potential matches based on race and relying on user behavior to shape match suggestions, these platforms can perpetuate and even amplify existing racial stereotypes and preferences.

Q2: What are racial filters, and why are they problematic?

A2: Racial filters in dating apps allow users to include or exclude potential matches based on their race or ethnicity. These filters are problematic because they enable and normalize racial segregation and discrimination, undermining the principle of equality and reducing the dating pool for minority users, which perpetuates feelings of rejection and exclusion.

Q3: Can altering dating app algorithms reduce racial bias?

A3: Yes, by redesigning the algorithms that suggest matches, dating platforms can reduce the impact of user biases on the matchmaking process. This involves modifying algorithms to not prioritize racial preferences and to promote a more diverse range of profiles to users, which can help break down stereotypes and encourage more inclusive interactions.
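One possible shape for such a redesign is a re-ranking step that keeps the underlying scores but guarantees a minimum share of exposure. The sketch below is a simplified illustration, not a description of any platform's method: `rerank_with_floor`, the `score` field, and the 50% floor are all hypothetical, and the scores are assumed to come from a model that does not use group membership as a feature.

```python
import math

def rerank_with_floor(candidates, min_share):
    """Greedy re-ranking with exposure floors.

    Before filling slot k, if any group in `min_share` holds fewer than
    floor(share * k) slots, the slot goes to the best remaining candidate
    from the first such group; otherwise it goes to the best candidate overall.
    """
    remaining = sorted(candidates, key=lambda c: c["score"], reverse=True)
    counts = {g: 0 for g in min_share}
    result = []
    while remaining:
        k = len(result) + 1
        pick = None
        for group, share in min_share.items():
            if counts[group] < math.floor(share * k):
                pick = next((c for c in remaining if c["group"] == group), None)
                if pick is not None:
                    break
        if pick is None:
            pick = remaining[0]  # no floor is binding, or that group is exhausted
        remaining.remove(pick)
        result.append(pick)
        if pick["group"] in counts:
            counts[pick["group"]] += 1
    return result

# Hypothetical candidates; by score alone, group B would first appear in slot 4.
pool = [
    {"name": "a1", "group": "A", "score": 0.9},
    {"name": "a2", "group": "A", "score": 0.8},
    {"name": "a3", "group": "A", "score": 0.7},
    {"name": "b1", "group": "B", "score": 0.6},
    {"name": "a4", "group": "A", "score": 0.5},
    {"name": "b2", "group": "B", "score": 0.4},
]
reranked = [c["name"] for c in rerank_with_floor(pool, {"B": 0.5})]
print(reranked)
```

The floor changes where profiles appear, not whether a user likes them, so it addresses visibility rather than individual choice.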

Q4: What steps can dating sites take to become more inclusive?

A4: Dating sites can take several steps to promote inclusivity, such as removing racial filters, increasing transparency about how their algorithms work, conducting regular audits for bias, and providing bias awareness training for their developers and staff. Additionally, engaging with community feedback and partnering with advocacy groups can help address specific concerns and improve practices.

Q5: How does racial bias in dating apps affect users?

A5: Racial bias in dating apps can lead to a range of negative outcomes for minority users, including lower visibility, fewer interactions, and direct or indirect discrimination. This not only affects their chances of finding a match but can also have broader psychological impacts, such as decreased self-esteem and increased feelings of alienation.

Q6: Are there legal implications for racial bias in dating apps?

A6: Yes, there can be legal implications for dating platforms if their design or functionality leads to systematic discrimination. As awareness of digital discrimination grows, legislators and regulators in various jurisdictions may consider laws and regulations that treat racial bias in dating apps similarly to discrimination in employment or housing.

Q7: How can users advocate for less biased dating platforms?

A7: Users can advocate for less biased dating platforms by providing feedback to the platforms, participating in community discussions, and supporting initiatives that promote diversity and inclusivity. Additionally, users can choose to support dating apps that demonstrate a commitment to combating racial bias and promoting fair treatment for all users.

Q8: How do dating apps sort profiles and what impact does this have on racial prejudice?

A8: Many dating apps use algorithms to sort profiles based on user preferences and behavior. This sorting can reinforce racial prejudice by promoting profiles that align with popular or majority preferences, which often exclude or marginalize minority groups, such as the Black women and Asian men whose reduced visibility is described above.

Q9: Is there a tendency for dating apps to uphold racist stereotypes in their matching processes?

A9: Unfortunately, yes. Some dating apps can uphold racist stereotypes through the way they design their matching algorithms. By allowing users to select, prefer, or reject potential matches based on racial or ethnic categories, these platforms may inadvertently support and perpetuate stereotypes, thereby influencing who users want to date based on biased notions of racial or sexual desirability.